The Anti-Kyte

Creating a central Repository using Git Bare

When working in a large IT department, there are times when you feel a bit like a Blue Peter presenter.

For example, there may be a requirement to colloborate with other programmers on a project but, for whatever reason, you do not have access to a hosting platform ( Github, Bitbucket, Gitlab – pick you’re favourite).

What you do have is a network share to which you all have access, and Git installed locally on each of your machines.

This can be thought of as the technical equivalent of an empty washing-up bottle, a couple of loo rolls and some sticky back plastic.

Fortunately, this represents the raw materials required to construct a Tracey Island or – in this case – a Bare Git Repo to act as the main repository for the project…

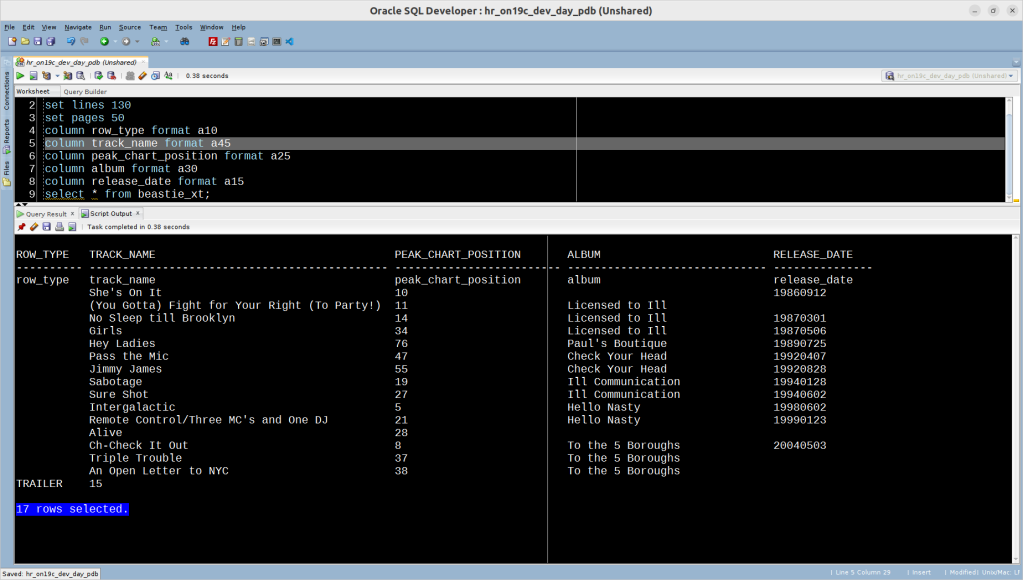

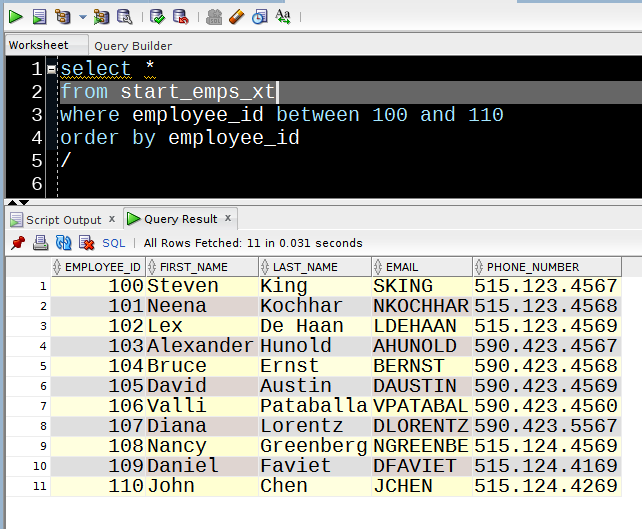

The Repository we want to share looks like this :

To create the main repository on the share, we can open a command window and create the directory to hold the repository on the share ( which is mapped to Z:\ in my case) :

mkdir z:\blue_peter

…then navigate to the new directory and create a Bare Repo …

git init --bare

You can then populate the repo with the existing project.

git remote add origin z:\blue_peter

git push origin main

NOTE – it could be because I was doing this on a VM, but when I first ran the push, I got an error about the ownership of the shared directory :

This can be solved by running :

git config --global --add safe.directory z:blue_peter

Looking at the files in our new main repo, we can see that it’s not shown as individual files, as you’d expect in a normal repo :

However, we can access the contents via Git in the normal way.

For example, I can now clone the repository to a different location. In real-life this would be a completely different client, but I’ve run out of VMs !

git clone z:\blue_peter

Side Note – Once again, I hit the dubious ownership issue :

Anyhow, we can see the files as usual in the cloned repo :

…and the repository now behaves as expected. If we make a change and push it…

We can pull the repo in another “client” :

After all that, I think you’ve earned a Blue Peter Badge.

Oracle External Tables and the External Modify Clause

I like to think that I’m not completely useless in the kitchen. A pinch of this, a dash of that and a glug of what you fancy ( which may or may not make it’s way into whatever I’m cooking) and the result is usually edible at least.

That said, the combination of precise quantities of substances by means of closely controlled chemical reactions is more Deb’s forte.

The result is usually delicious. Being traditionalists in our house, we do like to follow the time-honoured bake-off format and have a judging session of the finished article. We think of it as the Great British Cake Scoff.

However satisfying the act of culinary creation may be, there are times when you just want something you need to stick in the microwave for 2 minutes.

Which brings us to the matter of Oracle External Tables.

When they first arrived, External Tables provided an easy way to load data from a file directly into the database without all that messing about with SQL*Loader.

Of course, there were some limitations. If you wanted to point an external table at a file, you’d have to issue an alter table statement to set it ( and possibly the directory as well).

This meant that External Table access had to be serialized to ensure that it was pointed at the correct file until you were finished with it.

If you find yourself switching between Oracle versions, it’s worth remembering that, these days, things are a little different, thanks to the EXTERNAL MODIFY, which arrived in 12c.

What I’ll be looking at here is whether External tables can now be used concurrently in different sessions, accessing different files.

I’ll also explore the EXTERNAL MODIFY clause’s aversion to bind variables and how we might work around this securely in PL/SQL.

The ApplicationThe examples that follow were run on an Oracle Developer Day Virtual Box instance running Oracle 19.3.

We have a directory…

create or replace directory recipies_dir as '/u01/app/recipies';

…which contains some text files…

ls -1

debs_lemon_drizzle_cake.txt

mikes_beans_on_toast.txt

…and a simple external table to read files…

create table nom_nom_xt

(

line number,

text varchar2(4000)

)

organization external

(

type oracle_loader

default directory upload_files

access parameters

(

records delimited by newline

nologfile

nobadfile

nodiscardfile

fields terminated by '~'

missing field values are null

(

line recnum,

text char(4000)

)

)

location('')

)

reject limit unlimited

/

You’ll notice that I’ve specified the default directory as this is mandatory. However the location (i.e. the target file) is currently null.

Now, in the olden days, we’d have to issue a DDL statement to set the location before we could look at a file.

Since 12c however, we have the EXTERNAL MODIFY clause, so we can do this directly in a query :

select text

from nom_nom_xt

external modify

(

default directory recipies_dir

location('mikes_beans_on_toast.txt')

)

/

Alternatively…

select text

from nom_nom_xt

external modify

(

location(recipies_dir:'debs_lemon_drizzle_cake.txt')

)

/

After running these statments, we can see that the EXTERNAL MODIFY clause has had no effect on the table definition itself :

select directory_name, location

from user_external_locations

where table_name = 'NOM_NOM_XT';

Concurrency

Concurrency

Looking at the EXTERNAL MODIFY clause, it would seem that External Tables should now behave like Global Temporary Tables in that, whilst their structure is permanent, the data they contain is session specific.

Let’s put that to the test.

First of all, I’m going to take advantage of the fact I’m on Linux ( Oracle Linux Server 7.6 since you ask) and generate a text file from /usr/share/dict/words – a file that contains a list of words.

In the recipies directory on the os :

for i in {1..100}; do cat /usr/share/dict/words >>alphabet_soup.txt; done

cat alphabet_soup.txt >alphabetty_spaghetti.txt

I now have two rather chunky text files :

ls -lh alphabet*

-rw-r--r--. 1 oracle oinstall 473M May 27 14:25 alphabet_soup.txt

-rw-r--r--. 1 oracle oinstall 473M May 27 14:26 alphabetty_spaghetti.txt

…containing just under 48 million lines each…

cat alphabet_soup.txt |wc -l

47982800

Using the single external table, I’m going to load each file into a separate table in separate sessions.

The script for session 1 is :

set worksheetname Soup

column start_time format a10

column end_time format a10

-- Check that this is a different session from "session 2"

select sys_context('userenv', 'sessionid') from dual;

-- Give me time to switch sessions and start the other script

exec dbms_session.sleep(2);

select to_char(sysdate, 'HH24:MI:SS') as start_time from dual;

set timing on

create table alphabet_soup as

select *

from nom_nom_xt external modify( default directory recipies_dir location('alphabet_soup.txt'));

set timing off

select to_char(sysdate, 'HH24:MI:SS') as end_time from dual;

select count(*) from alphabet_soup;

In session 2 :

set worksheetname Spaghetti

column start_time format a10

column end_time format a10

-- Check that this is a different session from "session 1"

select sys_context('userenv', 'sessionid') from dual;

select to_char(sysdate, 'HH24:MI:SS') as start_time from dual;

set timing on

create table alphabetty_spaghetti as

select *

from nom_nom_xt external modify( default directory recipies_dir location('alphabetty_spaghetti.txt'));

set timing off

select to_char(sysdate, 'HH24:MI:SS') as end_time from dual;

select count(*) from alphabetty_spaghetti;

Note – the set worksheetname command is SQLDeveloper specific.

The results are…

Session 1 (Soup)SYS_CONTEXT('USERENV','SESSIONID')

------------------------------------

490941

PL/SQL procedure successfully completed.

START_TIME

----------

14:45:08

Table ALPHABET_SOUP created.

Elapsed: 00:01:06.199

END_TIME

----------

14:46:15

COUNT(*)

----------

47982800

Session 2 (Spaghetti)

SYS_CONTEXT('USERENV','SESSIONID')

-----------------------------------

490942

START_TIME

----------

14:45:09

Table ALPHABETTY_SPAGHETTI created.

Elapsed: 00:01:08.043

END_TIME

----------

14:46:17

COUNT(*)

----------

47982800

As we can see, the elapsed time is almost identical in both sessions. More importantly, both sessions’ CTAS statements finished within a couple of seconds of each other.

Therefore, we can conclude that both sessions accessed the External Table in parallel.

Whilst this does represent a considerable advance in the utility of External Tables, there is something of a catch when it comes to using them to access files via SQL*Plus…

Persuading EXTERNAL MODIFY to eat it’s greensA common use case for External Tables tends to be ETL processing. In such circumstances, the name of the file being loaded is likely to change frequently and so needs to be specified at runtime.

It’s also not unusual to have an External Table that you want to use on more than one directory ( e.g. as a log file viewer).

On the face of it, the EXTERNAL MODIFY clause should present no barrier to use in PL/SQL :

clear screen

set serverout on

begin

for r_line in

(

select text

from nom_nom_xt external modify ( default directory recipies_dir location ('debs_lemon_drizzle_cake.txt') )

)

loop

dbms_output.put_line(r_line.text);

end loop;

end;

/

Whilst this works with no problems, look what happens when we try to use a variable to specify the filename :

clear screen

set serverout on

declare

v_file varchar2(4000) := 'debs_lemon_drizzle_cake.txt';

begin

for r_line in

(

select text

from nom_nom_xt

external modify

(

default directory recipies_dir

location (v_file)

)

)

loop

dbms_output.put_line(r_line.text);

end loop;

end;

/

ORA-06550: line 7, column 90:

PL/SQL: ORA-00905: missing keyword

Specifying the directory in a variable doesn’t work either :

declare

v_dir varchar2(4000) := 'recipies_dir';

begin

for r_line in

(

select text

from nom_nom_xt

external modify

(

default directory v_dir

location ('debs_lemon_drizzle_cake.txt')

)

)

loop

dbms_output.put_line(r_line.text);

end loop;

end;

/

ORA-06564: object V_DIR does not exist

Just in case you’re tempted to solve this by doing something simple like :

clear screen

set serverout on

declare

v_dir all_directories.directory_name%type := 'recipies_dir';

v_file varchar2(100) := 'mikes_beans_on_toast.txt';

v_stmnt clob :=

q'[

select text

from nom_nom_xt external modify( default directory <dir> location('<file>'))

]';

v_rc sys_refcursor;

v_text varchar2(4000);

begin

v_stmnt := replace(replace(v_stmnt, '<dir>', v_dir), '<file>', v_file);

open v_rc for v_stmnt;

loop

fetch v_rc into v_text;

exit when v_rc%notfound;

dbms_output.put_line(v_text);

end loop;

close v_rc;

end;

/

You should be aware that this approach is vulnerable to SQL Injection.

I know that it’s become fashionable in recent years for “Security” to be invoked as a reason for all kinds of – often questionable – restrictions on the hard-pressed Software Engineer.

So, just in case you’re sceptical about this, here’s a quick demo :

clear screen

set serverout on

declare

v_dir varchar2(500) :=

q'[recipies_dir location ('mikes_beans_on_toast.txt')) union all select username||' '||account_status||' '||authentication_type from dba_users --]';

v_file varchar2(100) := 'mikes_beans_on_toast.txt';

v_stmnt varchar2(4000) := q'[select text from nom_nom_xt external modify (default directory <dir> location ('<file>'))]';

v_rc sys_refcursor;

v_text varchar2(4000);

begin

v_stmnt := replace(replace(v_stmnt, '<dir>', v_dir), '<file>', v_file);

open v_rc for v_stmnt;

loop

fetch v_rc into v_text;

exit when v_rc%notfound;

dbms_output.put_line(v_text);

end loop;

close v_rc;

end;

/

bread

baked beans

butter

SYS OPEN PASSWORD

SYSTEM OPEN PASSWORD

XS$NULL EXPIRED & LOCKED PASSWORD

HR OPEN PASSWORD

...snip...

PL/SQL procedure successfully completed.

If we resort to Dynamic SQL, we can pass the filename into the query as a bind variable :

set serverout on

declare

v_file varchar2(4000) := 'debs_lemon_drizzle_cake.txt';

v_stmnt clob :=

'select text from nom_nom_xt external modify( default directory recipies_dir location (:v_file))';

v_rc sys_refcursor;

v_text varchar2(4000);

v_rtn number;

begin

open v_rc for v_stmnt using v_file;

loop

fetch v_rc into v_text;

exit when v_rc%notfound;

dbms_output.put_line( v_text);

end loop;

close v_rc;

end;

/

…or, if you prefer…

clear screen

set serverout on

declare

v_file varchar2(120) := 'debs_lemon_drizzle_cake.txt';

v_stmnt clob := q'[select text from nom_nom_xt external modify( default directory recipies_dir location (:b1))]';

v_rc sys_refcursor;

v_text varchar2(4000);

v_curid number;

v_rtn number;

begin

v_curid := dbms_sql.open_cursor;

dbms_sql.parse(v_curid, v_stmnt, dbms_sql.native);

dbms_sql.bind_variable(v_curid, 'b1', v_file);

v_rtn := dbms_sql.execute(v_curid);

v_rc := dbms_sql.to_refcursor(v_curid);

loop

fetch v_rc into v_text;

exit when v_rc%notfound;

dbms_output.put_line( v_text);

end loop;

close v_rc;

end;

/

225g unsalted butter

225g caster sugar

4 free-range eggs

225g self-raising flour

1 unwaxed lemon

85g icing sugar

PL/SQL procedure successfully completed.

However, Oracle remains rather recalcitrant when you try doing the same with the default directory.

RTFM RTOB ( Read the Oracle Base article) !After a number of “glugs” from a bottle of something rather expensive whilst trawling through the Oracle Documentation for some clues, I happened to look at the Oracle Base article on this topic which notes that you cannot use bind variables when specifying the Default Directory.

One possible workaround would be to create one external table for each directory that you want to look at.

Alternatively, we can sanitize the incoming value for the DEFAULT DIRECTORY before we drop it into our query.

To this end, DBMS_ASSERT is not going to be much help.

The SQL_OBJECT_NAME function does not recognize Directory Objects…

select dbms_assert.sql_object_name('recipies_dir') from dual;

ORA-44002: invalid object name

… and the SIMPLE_SQL_NAME function will allow pretty much anything if it’s quoted…

select dbms_assert.simple_sql_name(

q'["recipies_dir location('mikes_beans_on_toast.txt')) union all select username from dba_users --"]')

from dual;

DBMS_ASSERT.SIMPLE_SQL_NAME(Q'["RECIPIES_DIRLOCATION('MIKES_BEANS_ON_TOAST.TXT'))UNIONALLSELECTUSERNAMEFROMDBA_USERS--"]')

---------------------------------------------------------------------------

"recipies_dir location('mikes_beans_on_toast.txt')) union all select username from dba_users --"

Time then, to unplug the microwave and cook up something home-made…

I’m running on 19c so I know that :

- A directory object name should be a maximum of 128 characters long

- the name will conform to the Database Object Naming Rules

Additionally, I’m going to assume that we’re following Oracle’s recommendation that quoted identifiers are not used for database object names (including Directories). You can find that pearl of wisdom in the page linked above.

Finally, I want to make sure that a user only accesses a valid directory object on which they have appropriate permissions.

Something like this should get us most of the way :

set serverout on

clear screen

declare

v_dir varchar2(4000) := 'recipies_dir';

v_file varchar2(4000) := 'debs_lemon_drizzle_cake.txt';

v_stmnt clob :=

'select text from nom_nom_xt external modify( default directory <dir> location (:v_file))';

v_rc sys_refcursor;

v_text varchar2(4000);

v_rtn number;

v_placeholder pls_integer;

v_found_dir boolean;

cursor c_valid_dir is

select null

from all_directories

where directory_name = upper(v_dir);

begin

if length( v_dir) > 128 then

raise_application_error(-20101, 'Directory Identifier is too long');

end if;

-- Assume allowable characters are alphanumeric and underscore. Reject if it contains anything else

if regexp_instr(replace(v_dir, '_'), '[[:punct:]]|[[:space:]]') > 0 then

raise_application_error(-20110, 'Directory Name contains wacky characters');

end if;

open c_valid_dir;

fetch c_valid_dir into v_placeholder;

v_found_dir := c_valid_dir%found;

close c_valid_dir;

if v_found_dir = false then

raise_application_error(-20120, 'Directory not found');

end if;

v_stmnt := replace(v_stmnt, '<dir>', v_dir);

open v_rc for v_stmnt using v_file;

loop

fetch v_rc into v_text;

exit when v_rc%notfound;

dbms_output.put_line( v_text);

end loop;

close v_rc;

end;

/

We can now convert this into an Invoker’s rights package, that should restrict access to directories visible by the calling user :

create or replace package peckish

authid current_user

as

type t_nom is table of varchar2(4000);

procedure validate_directory(i_dir in varchar2);

function recipe( i_dir in varchar2, i_file in varchar2)

return t_nom pipelined;

end peckish;

/

create or replace package body peckish as

procedure validate_directory( i_dir in varchar2)

is

v_placeholder pls_integer;

v_found_dir boolean;

cursor c_valid_dir is

select null

from all_directories

where directory_name = upper(i_dir);

begin

if length( i_dir) > 128 then

raise_application_error(-20101, 'Directory Identifier is too long');

end if;

if regexp_instr(replace(i_dir, '_'), '[[:punct:]]|[[:space:]]') > 0 then

raise_application_error(-20110, 'Directory Name contains wacky characters');

end if;

open c_valid_dir;

fetch c_valid_dir into v_placeholder;

v_found_dir := c_valid_dir%found;

close c_valid_dir;

if v_found_dir = false then

raise_application_error(-20120, 'Directory not found');

end if;

end validate_directory;

function recipe( i_dir in varchar2, i_file in varchar2)

return t_nom pipelined

is

v_nom nom_nom_xt%rowtype;

v_stmnt clob :=

'select line, text from nom_nom_xt external modify( default directory <dir> location (:v_file))';

v_rc sys_refcursor;

v_text varchar2(4000);

begin

validate_directory(i_dir);

v_stmnt := replace(v_stmnt, '<dir>', i_dir);

open v_rc for v_stmnt using i_file;

loop

fetch v_rc into v_nom.line, v_nom.text;

exit when v_rc%notfound;

pipe row( v_nom);

end loop;

close v_rc;

end recipe;

end peckish;

/

Let’s run some tests :

select line as line_no, text as ingredient

from table(peckish.recipe('recipies_dir', 'debs_lemon_drizzle_cake.txt'))

/

LINE_NO INGREDIENT

---------- ----------------------------------------

1 225g unsalted butter

2 225g caster sugar

3 4 free-range eggs

4 225g self-raising flour

5 1 unwaxed lemon

6 85g icing sugar

6 rows selected.

select text as ingredient

from table (

peckish.recipe(

'this_is_a_very_long_identifier_to_check_that_the_length_restriction_works_as_expected._Is_that_128_characters_yet_?_Apparently_not_Oh_well_lets_keep_going_for_a_bit',

'mikes_beans_on_toast.txt'

))

/

ORA-20101: Directory Identifier is too long

select text as ingredient

from table(

peckish.recipe

(

q'["recipies_dir location('mikes_beans_on_toast.txt')) union all select username from dba_users --"]',

'debs_lemon_drizzle_cake.txt'

))

/

ORA-20110: Directory Name contains wacky characters

select text as ingredient

from table (peckish.recipe('super_secret_dir', 'mikes_beans_on_toast.txt'))

/

ORA-20120: Directory not found

All of which has left me feeling rather in the mood for a snack. I wonder if there’s any of that cake left ?

Freddie Starr Ate My File ! Finding out exactly what the Oracle Filewatcher is up to

As useful as they undoubtedly are, any use of a DBMS_SCHEDULER File Watchers in Oracle is likely to involve a number of moving parts.

This can make trying to track down issues feel a bit like being on a hamster wheel.

Fortunately, you can easily find out just exactly what the filewatcher is up to, if you know where to look …

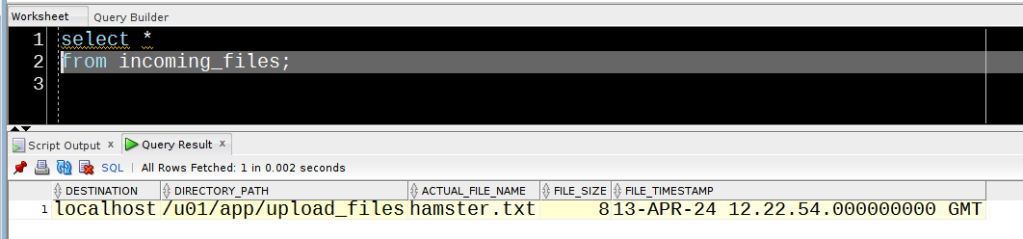

I’ve got a procedure to populate a table with details of any arriving file.

create table incoming_files(

destination VARCHAR2(4000),

directory_path VARCHAR2(4000),

actual_file_name VARCHAR2(4000),

file_size NUMBER,

file_timestamp TIMESTAMP WITH TIME ZONE)

/

create or replace procedure save_incoming_file( i_result sys.scheduler_filewatcher_result)

as

begin

insert into incoming_files(

destination,

directory_path,

actual_file_name,

file_size,

file_timestamp)

values(

i_result.destination,

i_result.directory_path,

i_result.actual_file_name,

i_result.file_size,

i_result.file_timestamp);

end;

/

The filewatcher and associated objects that will invoke this procedure are :

begin

dbms_credential.create_credential

(

credential_name => 'starr',

username => 'fstarr',

password => 'some-complex-password'

);

end;

/

begin

dbms_scheduler.create_file_watcher(

file_watcher_name => 'freddie',

directory_path => '/u01/app/upload_files',

file_name => '*.txt',

credential_name => 'starr',

enabled => false,

comments => 'Feeling peckish');

end;

/

begin

dbms_scheduler.create_program(

program_name => 'snack_prog',

program_type => 'stored_procedure',

program_action => 'save_incoming_file',

number_of_arguments => 1,

enabled => false);

-- need to make sure this program can see the message sent by the filewatcher...

dbms_scheduler.define_metadata_argument(

program_name => 'snack_prog',

metadata_attribute => 'event_message',

argument_position => 1);

-- Create a job that links the filewatcher to the program...

dbms_scheduler.create_job(

job_name => 'snack_job',

program_name => 'snack_prog',

event_condition => null,

queue_spec => 'freddie',

auto_drop => false,

enabled => false);

end;

/

The relevant components have been enabled :

begin

dbms_scheduler.enable('freddie');

dbms_scheduler.enable('snack_prog');

dbms_scheduler.enable('snack_job');

end;

/

… and – connected on the os as fstarr – I’ve dropped a file into the directory…

echo 'Squeak!' >/u01/app/upload_files/hamster.txtFile watchers are initiated by a scheduled run of the SYS FILE_WATCHER job.

The logging_level value determines whether or not the executions of this job will be available in the *_SCHEDULER_JOB_RUN_DETAILS views.

select program_name, schedule_name,

job_class, logging_level

from dba_scheduler_jobs

where owner = 'SYS'

and job_name = 'FILE_WATCHER'

/

PROGRAM_NAME SCHEDULE_NAME JOB_CLASS LOGGING_LEVEL

-------------------- ------------------------- ----------------------------------- ---------------

FILE_WATCHER_PROGRAM FILE_WATCHER_SCHEDULE SCHED$_LOG_ON_ERRORS_CLASS FULL

If the logging_level is set to OFF (which appears to be the default in 19c), you can enable it by connecting as SYSDBA and running :

begin

dbms_scheduler.set_attribute('FILE_WATCHER', 'logging_level', dbms_scheduler.logging_full);

end;

/

The job is assigned the FILE_WATCHER_SCHEDULE, which runs every 10 minutes by default. To check the current settings :

select repeat_interval

from dba_scheduler_schedules

where schedule_name = 'FILE_WATCHER_SCHEDULE'

/

REPEAT_INTERVAL

------------------------------

FREQ=MINUTELY;INTERVAL=10

The thing is, there are times when the SYS.FILE_WATCHER seems to slope off for a tea-break. So, if you’re wondering why your file has not been processed yet, it’s handy to be able to check if this job has run when you expected it to.

In this case, as logging is enabled, we can do just that :

select log_id, log_date, instance_id, req_start_date, actual_start_date

from dba_scheduler_job_run_details

where owner = 'SYS'

and job_name = 'FILE_WATCHER'

and log_date >= sysdate - (1/24)

order by log_date desc

/

LOG_ID LOG_DATE INSTANCE_ID REQ_START_DATE ACTUAL_START_DATE

------- ----------------------------------- ----------- ------------------------------------------ ------------------------------------------

1282 13-APR-24 14.50.47.326358000 +01:00 1 13-APR-24 14.50.47.000000000 EUROPE/LONDON 13-APR-24 14.50.47.091753000 EUROPE/LONDON

1274 13-APR-24 14.40.47.512172000 +01:00 1 13-APR-24 14.40.47.000000000 EUROPE/LONDON 13-APR-24 14.40.47.075846000 EUROPE/LONDON

1260 13-APR-24 14.30.47.301176000 +01:00 1 13-APR-24 14.30.47.000000000 EUROPE/LONDON 13-APR-24 14.30.47.048977000 EUROPE/LONDON

1248 13-APR-24 14.20.47.941210000 +01:00 1 13-APR-24 14.20.47.000000000 EUROPE/LONDON 13-APR-24 14.20.47.127769000 EUROPE/LONDON

1212 13-APR-24 14.10.48.480193000 +01:00 1 13-APR-24 14.10.47.000000000 EUROPE/LONDON 13-APR-24 14.10.47.153032000 EUROPE/LONDON

1172 13-APR-24 14.00.50.676270000 +01:00 1 13-APR-24 14.00.47.000000000 EUROPE/LONDON 13-APR-24 14.00.47.111936000 EUROPE/LONDON

6 rows selected.

Even if the SYS.FILE_WATCHER is not logging, when it does run, any files being watched for are added to a queue, the contents of which can be found in SCHEDULER_FILEWATCHER_QT.

This query will get you the really useful details of what your filewatcher has been up to :

select

t.step_no,

treat( t.user_data as sys.scheduler_filewatcher_result).actual_file_name as filename,

treat( t.user_data as sys.scheduler_filewatcher_result).file_size as file_size,

treat( t.user_data as sys.scheduler_filewatcher_result).file_timestamp as file_ts,

t.enq_time,

x.name as filewatcher,

x.requested_file_name as search_pattern,

x.credential_name as credential_name

from sys.scheduler_filewatcher_qt t,

table(t.user_data.matching_requests) x

where enq_time > trunc(sysdate)

order by enq_time

/

STEP_NO FILENAME FILE_SIZE FILE_TS ENQ_TIME FILEWATCHER SEARCH_PATTERN CREDENTIAL_NAME

---------- --------------- ---------- -------------------------------- ---------------------------- --------------- --------------- ---------------

0 hamster.txt 8 13-APR-24 12.06.58.000000000 GMT 13-APR-24 12.21.31.746338000 FREDDIE *.txt STARR

Happily, in this case, our furry friend has avoided the Grim Squaker…

NOTE – No hamsters were harmed in the writing of this post.

If you think I’m geeky, you should meet my friend.

I’d like to talk about a very good friend of mine.

Whilst he’s much older than me ( 11 or 12 weeks at least), we do happen to share interests common to programmers of a certain vintage.

About a year ago, he became rather unwell.

Since then, whenever I’ve gone to visit, I’ve taken care to wear something that’s particular to our friendship and/or appropriately geeky.

At one point, when things were looking particularly dicey, I promised him, that whilst “Captain Scarlet” was already taken, if he came through he could pick any other colour he liked.

As a life-long Luton Town fan, his choice was somewhat inevitable.

So then, what follows – through the medium of Geeky T-shirts – is a portrait of my mate Simon The Indestructable Captain Orange…

When we first met, Windows 3.1 was still on everyone’s desktop and somewhat prone to hanging at inopportune moments. Therefore, we are fully aware of both the origins and continuing relevance of this particular pearl of wisdom :

Fortunately, none of the machines Simon was wired up to in the hospital seemed to be running any version of Windows so I thought he’d be reassured by this :

Whilst our first meeting did not take place on a World riding through space on the back of a Giant Turtle ( it was in fact, in Milton Keynes), Simon did earn my eternal gratitude by recommending the book Good Omens – which proved to be my gateway to Discworld.

The relevance of this next item of “Geek Chic” is that, when Simon later set up his own company, he decided that it should have a Latin motto.

In this, he was inspired by the crest of the Ankh-Morpork Assassins’ Guild :

His motto :

Nil codex sine Lucre

…which translates as …

No code without payment

From mottoes to something more akin to a mystic incantation, chanted whenever you’re faced with a seemingly intractable technical issue. Also, Simon likes this design so…

As we both know, there are 10 types of people – those who understand binary and those who don’t…

When confronted by something like this, I am able to recognise that the binary numbers are ASCII codes representing alphanumeric characters. However, I’ve got nothing on Simon, a one-time Assembler Programmer.

Whilst I’m mentally removing my shoes and socks in preparation to translate the message, desperately trying to remember the golden rule of binary maths ( don’t forget to carry the 1), he’ll just come straight out with the answer (“Geek”, in this case).

Saving the geekiest to last, I’m planning to dazzle with this on my next visit :

Techie nostalgia and a Star Wars reference all on the one t-shirt. I don’t think I can top that. Well, not for now anyway.

Using the APEX_DATA_EXPORT package directly from PL/SQL

As Data Warehouse developers, there is frequently a need for us to produce user reports in a variety of formats (ok, Excel).

Often these reports are the output of processes running as part of an unattended batch.

In the past I’ve written about some of the solutions out there for creating csv files and, of course Excel.

The good news is that, since APEX 20.2, Oracle provides the ability to do this out-of-the-box by means of the APEX_DATA_EXPORT PL/SQL package.

The catch is that you need to have an active APEX session to call it.

Which means you need to have an APEX application handy.

Fortunately, it is possible to initiate an APEX session and call this package without going anywhere near the APEX UI itself, as you’ll see shortly.

Specfically what we’ll cover is :

- generating comma-separated (CSV) output

- generating an XLSX file

- using the ADD_AGGREGATE procedure to add a summary

- using the ADD_HIGHLIGHT procedure to apply conditional formatting

- using the GET_PRINT_CONFIG function to apply document-wide styling

Additionally, we’ll explore how to create a suitable APEX Application from a script if one is not already available.

Incidentally, the scripts in this post can be found in this Github Repo.

Before I go any further, I should acknowledge the work of Amirreza Rastandeh, who’s LinkedIn article inspired this post.

A quick word on the Environment I used in these examples – it’s an Oracle supplied VirtualBox appliance running Oracle 23c Free Database and APEX 22.2.

Generating CSV outputAPEX_DATA_EXPORT offers a veritable cornucopia of output formats. However, to begin with, let’s keep things simple and just generate a CSV into a CLOB object so that we can check the contents directly from within a script.

We will need to call APEX_SESSION.CREATE_SESSION and pass it some details of an Apex application in order for this to work so the first thing we need to do is to see if we have such an application available :

select workspace, application_id, page_id, page_name

from apex_application_pages

order by page_id

/

WORKSPACE APPLICATION_ID PAGE_ID PAGE_NAME

------------------------------ -------------- ---------- --------------------

HR_REPORT_FILES 105 0 Global Page

HR_REPORT_FILES 105 1 Home

HR_REPORT_FILES 105 9999 Login Page

As long as we get at least one row back from this query, we’re good to go. Now for the script itself (called csv_direct.sql) :

set serverout on size unlimited

clear screen

declare

cursor c_apex_app is

select ws.workspace_id, ws.workspace, app.application_id, app.page_id

from apex_application_pages app

inner join apex_workspaces ws

on ws.workspace = app.workspace

order by page_id;

v_apex_app c_apex_app%rowtype;

v_stmnt varchar2(32000);

v_context apex_exec.t_context;

v_export apex_data_export.t_export;

begin

dbms_output.put_line('Getting app details...');

-- We only need the first record returned by this cursor

open c_apex_app;

fetch c_apex_app into v_apex_app;

close c_apex_app;

apex_util.set_workspace(v_apex_app.workspace);

dbms_output.put_line('Creating session');

apex_session.create_session

(

p_app_id => v_apex_app.application_id,

p_page_id => v_apex_app.page_id,

p_username => 'anynameyoulike' -- this parameter is mandatory but can be any string apparently

);

v_stmnt := 'select * from departments';

dbms_output.put_line('Opening context');

v_context := apex_exec.open_query_context

(

p_location => apex_exec.c_location_local_db,

p_sql_query => v_stmnt

);

dbms_output.put_line('Running Report');

v_export := apex_data_export.export

(

p_context => v_context,

p_format => 'CSV', -- patience ! We'll get to the Excel shortly.

p_as_clob => true -- by default the output is saved as a blob. This overrides to save as a clob

);

apex_exec.close( v_context);

dbms_output.put_line(v_export.content_clob);

end;

/

Running this we get :

Getting app details...

Creating session

Opening context

Running Report

DEPARTMENT_ID,DEPARTMENT_NAME,MANAGER_ID,LOCATION_ID

10,Administration,200,1700

20,Marketing,201,1800

30,Purchasing,114,1700

40,Human Resources,203,2400

50,Shipping,121,1500

60,IT,103,1400

70,Public Relations,204,2700

80,Sales,145,2500

90,Executive,100,1700

100,Finance,108,1700

110,Accounting,205,1700

120,Treasury,,1700

130,Corporate Tax,,1700

140,Control And Credit,,1700

150,Shareholder Services,,1700

160,Benefits,,1700

170,Manufacturing,,1700

180,Construction,,1700

190,Contracting,,1700

200,Operations,,1700

210,IT Support,,1700

220,NOC,,1700

230,IT Helpdesk,,1700

240,Government Sales,,1700

250,Retail Sales,,1700

260,Recruiting,,1700

270,Payroll,,1700

PL/SQL procedure successfully completed.

We can get away with using DBMS_OUTPUT as the result set is comparatively small. Under normal circumstances, you’ll probably want to save it into a table ( as in Amirreza’s post), or write it out to a file.

In fact, writing to a file is exactly what we’ll be doing with the Excel output we’ll generate shortly.

First though, what if you don’t have a suitable APEX application lying around…

Creating an APEX application from SQLNOTE – you only need to do this if you do not already have a suitable APEX Application available.

If you do then feel free to skip to the next bit, where we finally start generating XLSX files !

First, we need to check to see if there is an APEX workspace present for us to create the Application in :

select workspace, workspace_id

from apex_workspaces;

If this returns any rows then you should be OK to pick one of the workspaces listed and create your application in that.

Otherwise, you can create a Workspace by connecting to the database as a user with the APEX_ADMINISTRATOR_ROLE and running :

exec apex_instance_admin.add_workspace( p_workspace => 'HR_REPORT_FILES', p_primary_schema => 'HR');…where HR_REPORT_FILES is the name of the workspace you want to create and HR is a schema that has access to the database objects you want to run your reports against.

Next, following Jeffrey Kemp’s sage advice ,

I can just create and then export an Application on any compatible APEX instance and then simply import the resulting file.

That’s right, it’ll work irrespective of the environment on which the export file is created, as long as it’s a compatible APEX version.

If you need them, the instructions for exporting an APEX application are here.

I’ve just clicked through the Create Application Wizard to produce an empty application using the HR schema and have then exported it to a file called apex22_hr_report_files_app.sql.

To import it, I ran the following script:

begin

apex_application_install.set_workspace('HR_REPORT_FILES');

apex_application_install.generate_application_id;

apex_application_install.generate_offset;

end;

/

@/home/mike/Downloads/apex22_hr_report_files_app.sql

Once it’s run we can confirm that the import was successful :

select application_id, application_name,

page_id, page_name

from apex_application_pages

where workspace = 'HR_REPORT_FILES'

/

APPLICATION_ID APPLICATION_NAME PAGE_ID PAGE_NAME

-------------- -------------------- ---------- --------------------

105 Lazy Reports 0 Global Page

105 Lazy Reports 1 Home

105 Lazy Reports 9999 Login Page

The add_workspace.sql script in the Github Repo executes both the create workspace and application import steps described here.

Right, where were we…

Generating and Excel fileFirst we’ll need a directory object so we can write our Excel file out to disk. So, as a suitably privileged user :

create or replace directory hr_reports as '/opt/oracle/hr_reports';

grant read, write on directory hr_reports to hr;

OK – now to generate our report as an Excel…

set serverout on size unlimited

clear screen

declare

cursor c_apex_app is

select ws.workspace_id, ws.workspace, app.application_id, app.page_id

from apex_application_pages app

inner join apex_workspaces ws

on ws.workspace = app.workspace

order by page_id;

v_apex_app c_apex_app%rowtype;

v_stmnt varchar2(32000);

v_context apex_exec.t_context;

v_export apex_data_export.t_export;

-- File handling variables

v_dir all_directories.directory_name%type := 'HR_REPORTS';

v_fname varchar2(128) := 'hr_departments_reports.xlsx';

v_fh utl_file.file_type;

v_buffer raw(32767);

v_amount integer := 32767;

v_pos integer := 1;

v_length integer;

begin

open c_apex_app;

fetch c_apex_app into v_apex_app;

close c_apex_app;

apex_util.set_workspace(v_apex_app.workspace);

v_stmnt := 'select * from departments';

apex_session.create_session

(

p_app_id => v_apex_app.application_id,

p_page_id => v_apex_app.page_id,

p_username => 'whatever' -- any string will do !

);

-- Create the query context...

v_context := apex_exec.open_query_context

(

p_location => apex_exec.c_location_local_db,

p_sql_query => v_stmnt

);

-- ...and export the data into the v_export object

-- this time use the default - i.e. export to a BLOB, rather than a CLOB

v_export := apex_data_export.export

(

p_context => v_context,

p_format => apex_data_export.c_format_xlsx -- XLSX

);

apex_exec.close( v_context);

dbms_output.put_line('Writing file');

-- Now write the blob out to an xlsx file

v_length := dbms_lob.getlength( v_export.content_blob);

v_fh := utl_file.fopen( v_dir, v_fname, 'wb', 32767);

while v_pos <= v_length loop

dbms_lob.read( v_export.content_blob, v_amount, v_pos, v_buffer);

utl_file.put_raw(v_fh, v_buffer, true);

v_pos := v_pos + v_amount;

end loop;

utl_file.fclose( v_fh);

dbms_output.put_line('File written to HR_REPORTS');

exception when others then

dbms_output.put_line(sqlerrm);

if utl_file.is_open( v_fh) then

utl_file.fclose(v_fh);

end if;

end;

/

As you can see, this script is quite similar to the csv version. The main differences are that, firstly, we’re saving the output as a BLOB rather than a CLOB, simply by not overriding the default behaviour when calling APEX_DATA_EXPORT.EXPORT.

Secondly, we’re writing the result out to a file.

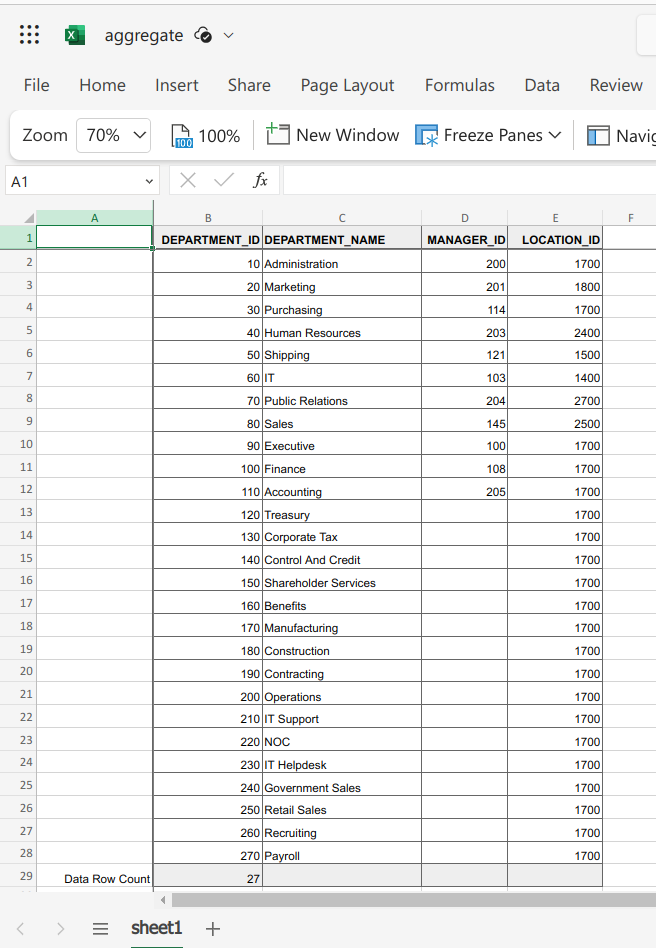

Once we retrieve the file and open it, we can see that indeed, it is in xlsx format :

As well as generating a vanilla spreadsheet, APEX_DATA_EXPORT does have a few more tricks up it’s sleeve…

Adding an AggregationWe can add a row count to the bottom of our report by means of the ADD_AGGREGATE procedure.

To do so, we need to modify the report query to produce the data to be used by the aggregate :

select department_id, department_name, manager_id, location_id,

count( 1) over() as record_count

from departments';

As we don’t want to list the record count on every row, we need to exclude the record_count column from the result set by specifying the columns that we do actually want in the output. We can do this with calls to the ADD_COLUMN procedure :

apex_data_export.add_column( p_columns => v_columns, p_name => 'DEPARTMENT_ID');

apex_data_export.add_column( p_columns => v_columns, p_name => 'DEPARTMENT_NAME');

apex_data_export.add_column( p_columns => v_columns, p_name => 'MANAGER_ID');

apex_data_export.add_column( p_columns => v_columns, p_name => 'LOCATION_ID');

NOTE – column names passed in the P_NAME parameter in this procedure need to be in UPPERCASE.

Finally, we need to specify an aggregate itself using the ADD_AGGREGATE procedure :

apex_data_export.add_aggregate

(

p_aggregates => v_aggregates,

p_label => 'Data Row Count',

p_display_column => 'DEPARTMENT_ID',

p_value_column => 'RECORD_COUNT'

);

The finished script is called excel_aggregate.sql :

set serverout on size unlimited

clear screen

declare

cursor c_apex_app is

select ws.workspace_id, ws.workspace, app.application_id, app.page_id

from apex_application_pages app

inner join apex_workspaces ws

on ws.workspace = app.workspace

order by page_id;

v_apex_app c_apex_app%rowtype;

v_stmnt varchar2(32000);

v_columns apex_data_export.t_columns;

v_aggregates apex_data_export.t_aggregates;

v_context apex_exec.t_context;

v_export apex_data_export.t_export;

-- File handling variables

v_dir all_directories.directory_name%type := 'HR_REPORTS';

v_fname varchar2(128) := 'aggregate.xlsx';

v_fh utl_file.file_type;

v_buffer raw(32767);

v_amount integer := 32767;

v_pos integer := 1;

v_length integer;

begin

-- We only need the first record returned by this cursor

open c_apex_app;

fetch c_apex_app into v_apex_app;

close c_apex_app;

apex_util.set_workspace(v_apex_app.workspace);

apex_session.create_session

(

p_app_id => v_apex_app.application_id,

p_page_id => v_apex_app.page_id,

p_username => 'anynameyoulike'

);

-- Add a row with a count of the records in the file

-- We need to add the relevant data to the query and then format it

v_stmnt :=

'select department_id, department_name, manager_id, location_id,

count( 1) over() as record_count

from departments';

-- Make sure only the data columns to display on the report, not the record_count

-- NOTE - in all of these procs, column names need to be passed as upper case literals

apex_data_export.add_column( p_columns => v_columns, p_name => 'DEPARTMENT_ID');

apex_data_export.add_column( p_columns => v_columns, p_name => 'DEPARTMENT_NAME');

apex_data_export.add_column( p_columns => v_columns, p_name => 'MANAGER_ID');

apex_data_export.add_column( p_columns => v_columns, p_name => 'LOCATION_ID');

apex_data_export.add_aggregate

(

p_aggregates => v_aggregates,

p_label => 'Data Row Count',

p_display_column => 'DEPARTMENT_ID',

p_value_column => 'RECORD_COUNT'

);

v_context := apex_exec.open_query_context

(

p_location => apex_exec.c_location_local_db,

p_sql_query => v_stmnt

);

v_export := apex_data_export.export

(

p_context => v_context,

p_format => apex_data_export.c_format_xlsx, -- XLSX

p_columns => v_columns,

p_aggregates => v_aggregates

);

apex_exec.close( v_context);

v_length := dbms_lob.getlength( v_export.content_blob);

v_fh := utl_file.fopen( v_dir, v_fname, 'wb', 32767);

while v_pos <= v_length loop

dbms_lob.read( v_export.content_blob, v_amount, v_pos, v_buffer);

utl_file.put_raw(v_fh, v_buffer, true);

v_pos := v_pos + v_amount;

end loop;

utl_file.fclose( v_fh);

dbms_output.put_line('File written to HR_REPORTS');

exception when others then

dbms_output.put_line(sqlerrm);

if utl_file.is_open( v_fh) then

utl_file.fclose(v_fh);

end if;

end;

/

When we run this, we now get a row count row at the bottom of the file :

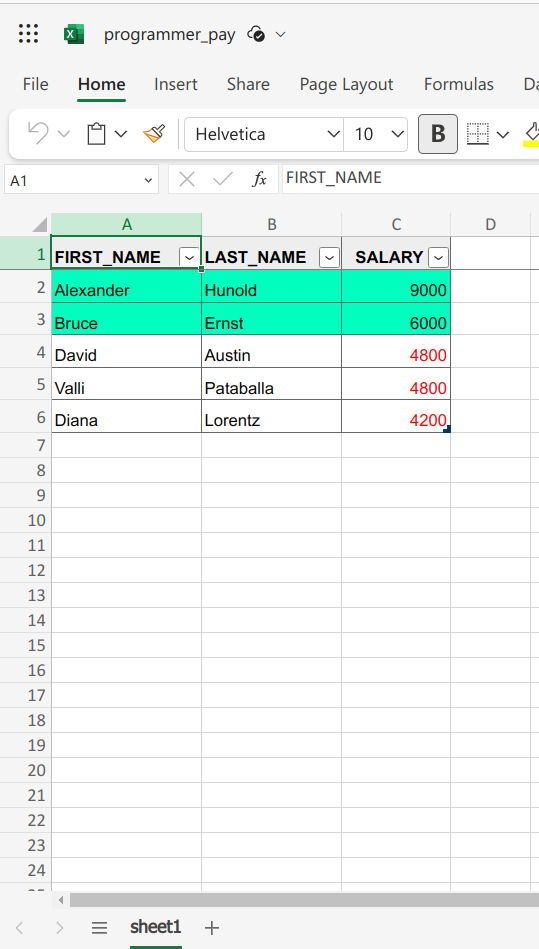

Highlighting cells and rows

Highlighting cells and rows

The ADD_HIGHLIGHT procedure allows us to apply conditional formatting to individual cells, or even entire rows.

Once again the values to determine this behaviour need to be included in the report query.

In this case, we specify a highlight_id value to be used in rendering the row.

This time, I have a new query and I want to apply formatting based on the value of the SALARY column.

If the value is below 6000 then I want to make the text red ( by applying highlighter_id 1).

Otherwise, I want to set the background colour to green for the entire row ( highlighter_id 2).

The query therefore is this :

select first_name, last_name, salary,

case when salary < 6000 then 1 else 2 end as fair_pay

from employees

where job_id = 'IT_PROG'

Highlight 1 is :

-- text in red for id 1

apex_data_export.add_highlight(

p_highlights => v_highlights,

p_id => 1,

p_value_column => 'FAIR_PAY',

p_display_column => 'SALARY',

p_text_color => '#FF0000' );

…and highlight 2 is :

-- Whole row with green background for id 2

apex_data_export.add_highlight

(

p_highlights => v_highlights,

p_id => 2,

p_value_column => 'FAIR_PAY',

p_text_color => '#000000', -- black

p_background_color => '#00ffbf' -- green

);

The finished script is excel_highlight.sql :

set serverout on size unlimited

clear screen

declare

cursor c_apex_app is

select ws.workspace_id, ws.workspace, app.application_id, app.page_id

from apex_application_pages app

inner join apex_workspaces ws

on ws.workspace = app.workspace

order by page_id;

v_apex_app c_apex_app%rowtype;

v_stmnt varchar2(32000);

v_highlights apex_data_export.t_highlights;

v_context apex_exec.t_context;

v_export apex_data_export.t_export;

v_dir all_directories.directory_name%type := 'HR_REPORTS';

v_fname varchar2(128) := 'programmer_pay.xlsx';

v_fh utl_file.file_type;

v_buffer raw(32767);

v_amount integer := 32767;

v_pos integer := 1;

v_length integer;

begin

open c_apex_app;

fetch c_apex_app into v_apex_app;

close c_apex_app;

apex_util.set_workspace(v_apex_app.workspace);

apex_session.create_session

(

p_app_id => v_apex_app.application_id,

p_page_id => v_apex_app.page_id,

p_username => 'anynameyoulike'

);

-- Add a row with a count of the records in the file

-- We need to add the relevant data to the query and then format it

v_stmnt :=

q'[select first_name, last_name, salary,

case when salary < 6000 then 1 else 2 end as fair_pay

from employees

where job_id = 'IT_PROG']';

-- text in red for id 1

apex_data_export.add_highlight(

p_highlights => v_highlights,

p_id => 1,

p_value_column => 'FAIR_PAY',

p_display_column => 'SALARY',

p_text_color => '#FF0000' );

-- Whole row with green background for id 2

apex_data_export.add_highlight

(

p_highlights => v_highlights,

p_id => 2,

p_value_column => 'FAIR_PAY',

p_text_color => '#000000', -- black

p_background_color => '#00ffbf' -- green

);

v_context := apex_exec.open_query_context

(

p_location => apex_exec.c_location_local_db,

p_sql_query => v_stmnt

);

-- Pass the highlights object into the export

v_export := apex_data_export.export

(

p_context => v_context,

p_format => apex_data_export.c_format_xlsx,

p_highlights => v_highlights

);

apex_exec.close( v_context);

v_length := dbms_lob.getlength( v_export.content_blob);

v_fh := utl_file.fopen( v_dir, v_fname, 'wb', 32767);

while v_pos <= v_length loop

dbms_lob.read( v_export.content_blob, v_amount, v_pos, v_buffer);

utl_file.put_raw(v_fh, v_buffer, true);

v_pos := v_pos + v_amount;

end loop;

utl_file.fclose( v_fh);

dbms_output.put_line('File written to HR_REPORTS');

exception when others then

dbms_output.put_line(sqlerrm);

if utl_file.is_open( v_fh) then

utl_file.fclose(v_fh);

end if;

end;

/

…and the output…

Formatting with GET_PRINT_CONFIG

Formatting with GET_PRINT_CONFIG

The GET_PRINT_CONFIG function offers a plethora of document formatting options…and a chance for me to demonstrate that I’m really more of a back-end dev.

To demonstrate just some of the available options, I have :

- changed the header and body font family to Times (default is Helvetica)

- set the heading text to be White and bold

- set the header background to be Dark Gray ( or Grey, if you prefer)

- set the body background to be Turquoise

- set the body font colour to be Midnight Blue

All of which looks like this :

v_print_config := apex_data_export.get_print_config

(

p_header_font_family => apex_data_export.c_font_family_times, -- Default is "Helvetica"

p_header_font_weight => apex_data_export.c_font_weight_bold, -- Default is "normal"

p_header_font_color => '#FFFFFF', -- White

p_header_bg_color => '#2F4F4F', -- DarkSlateGrey/DarkSlateGray

p_body_font_family => apex_data_export.c_font_family_times,

p_body_bg_color => '#40E0D0', -- Turquoise

p_body_font_color => '#191970' -- MidnightBlue

);

Note that, according to the documentation, GET_PRINT_CONFIG will also accept HTML colour names or RGB codes.

Anyway, the script is called excel_print_config.sql :

set serverout on size unlimited

clear screen

declare

cursor c_apex_app is

select ws.workspace_id, ws.workspace, app.application_id, app.page_id

from apex_application_pages app

inner join apex_workspaces ws

on ws.workspace = app.workspace

order by page_id;

v_apex_app c_apex_app%rowtype;

v_stmnt varchar2(32000);

v_context apex_exec.t_context;

v_print_config apex_data_export.t_print_config;

v_export apex_data_export.t_export;

-- File handling variables

v_dir all_directories.directory_name%type := 'HR_REPORTS';

v_fname varchar2(128) := 'crayons.xlsx';

v_fh utl_file.file_type;

v_buffer raw(32767);

v_amount integer := 32767;

v_pos integer := 1;

v_length integer;

begin

open c_apex_app;

fetch c_apex_app into v_apex_app;

close c_apex_app;

apex_util.set_workspace(v_apex_app.workspace);

apex_session.create_session

(

p_app_id => v_apex_app.application_id,

p_page_id => v_apex_app.page_id,

p_username => 'anynameyoulike'

);

v_stmnt := 'select * from departments';

v_context := apex_exec.open_query_context

(

p_location => apex_exec.c_location_local_db,

p_sql_query => v_stmnt

);

-- Let's do some formatting.

-- OK, let's just scribble with the coloured crayons...

v_print_config := apex_data_export.get_print_config

(

p_header_font_family => apex_data_export.c_font_family_times, -- Default is "Helvetica"

p_header_font_weight => apex_data_export.c_font_weight_bold, -- Default is "normal"

p_header_font_color => '#FFFFFF', -- White

p_header_bg_color => '#2F4F4F', -- DarkSlateGrey/DarkSlateGray

p_body_font_family => apex_data_export.c_font_family_times,

p_body_bg_color => '#40E0D0', -- Turquoise

p_body_font_color => '#191970' -- MidnightBlue

);

-- Specify the print_config in the export

v_export := apex_data_export.export

(

p_context => v_context,

p_format => apex_data_export.c_format_xlsx,

p_print_config => v_print_config

);

apex_exec.close( v_context);

v_length := dbms_lob.getlength( v_export.content_blob);

v_fh := utl_file.fopen( v_dir, v_fname, 'wb', 32767);

while v_pos <= v_length loop

dbms_lob.read( v_export.content_blob, v_amount, v_pos, v_buffer);

utl_file.put_raw(v_fh, v_buffer, true);

v_pos := v_pos + v_amount;

end loop;

utl_file.fclose( v_fh);

dbms_output.put_line('File written to HR_REPORTS');

exception when others then

dbms_output.put_line(sqlerrm);

if utl_file.is_open( v_fh) then

utl_file.fclose(v_fh);

end if;

end;

/

…and the resulting file is about as garish as you’d expect…

On the off-chance that you might prefer a more subtle colour scheme, you can find a list of HTML colour codes here.

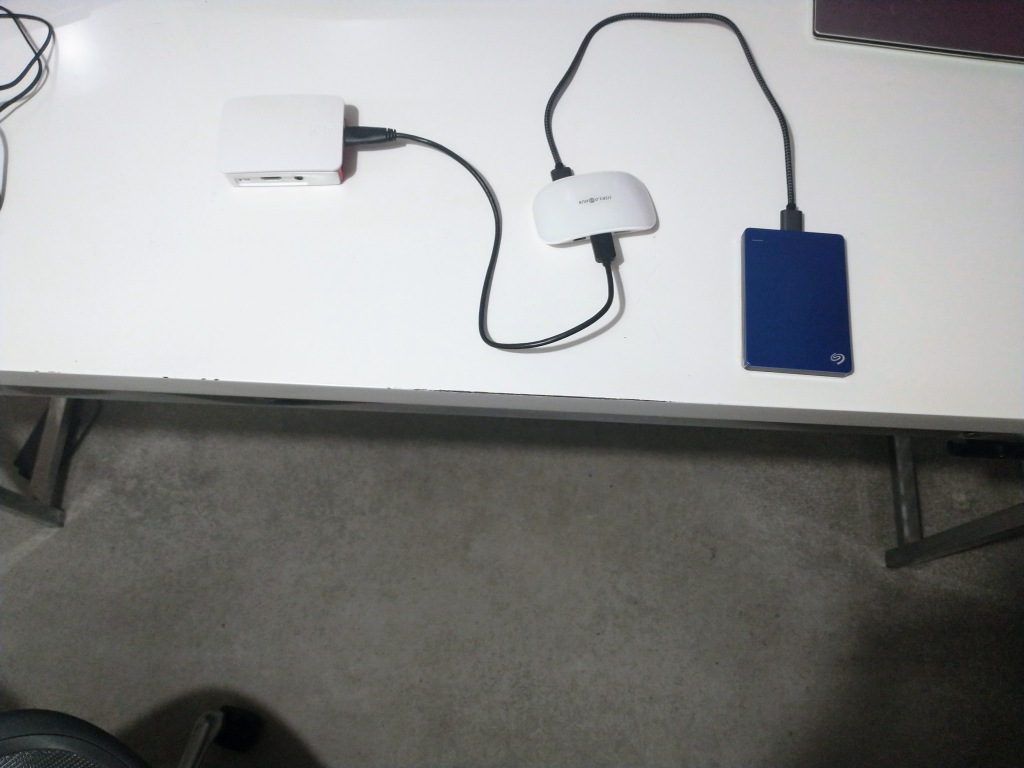

Pi-Eyed and Clueless – Adventures with Board and Flash Drive

Since setting up my Raspberry Pi as a Plex Media Server a few years ago, the reliable application of Moore’s Law has brought us to a point where a humble USB flash drive now has sufficient capacity to cope with all of my media files and then some.

Therefore, I felt it was probably time to retire the powered external HDD that had been doing service as storage and replace it with a nice, compact stick.

Existing Setup

Existing Setup

…to new setup in 3 easy steps ( and several hard ones)

…to new setup in 3 easy steps ( and several hard ones)

The Pi in question is a Pi3 and is running Raspbian 11 (Bullseye).

The main consideration here was that I wanted to ensure that the new storage was visible to Plex from the same location as before so that I didn’t have to go through the rigmarole of validating and correcting all of the metadata that Plex retrieves for each media file ( Movie details etc).

Having copied the relevant files from the HDD to the new Flash Drive, I was ready to perform this “simple” admin task.

The steps to accomplish this are set out below.

Also detailed are some of the issues I ran into and how I managed to solve them, just in case you’ve stumbled across this post in a desparate search for a solution to your beloved fruit-based computer having turned into a slice of Brick Pi. In fact, let’s start with…

Pi not booting after editing /etc/fstabOn one occasion, after editing the fstab file and rebooting, I found the Pi unresponsive.

Having connected a display and keyboard to the Pi and starting it again, I was greeted with the message :

"You are in emergency mode. After logging in, type "journalctl -xb" to view system logs, "systemctl reboot to reboot, "systemctl default" or ^D to try again to boot into the default mode.

Press Enter to continue

…and after pressing enter…

"Cannot open access to console, the root accoutn is locked.

See sulogin(8) man page for more details.

Press Enter to continue...

…after which pressing Enter seems to have little effect.

According to this Stack Exchange thread, one solution would be to remove the SD card from the Pi, mount it on a suitable filesystem on alternative hardware ( e.g. a laptop) and edit the /etc/fstab file to remove the offending entry.

I took the other route…

Start by Rebooting and hold down the [Shift] key so that Raspbian takes you to the Noobs screen.

Now, from the top menu, select Edit config. This opens an editor with two tabs – config and cmdline.txt

We want to edit the cmdline.txt so click on that tab.

Despite appearances, this file is all on a single line so when you append to it, you need to add a space at the start and not a newline. To persuade Raspbian to give us access to a command prompt, we need to append :

init=/bin/shNow, save the file then choose Exit (Esc) from the NOOBS menu so that the Pi continues to boot.

You should now be resarded with a ‘#’ prompt.

At this point, the filesystem is mounted but is read only. As we want to fix the issue by editing (i.e. writing to) the /etc/fstab file, we need to remount it in read/write mode. We can do this by typing the following at the prompt :

mount -n -o remount,rw /Now, finally, I can use nano to remove the offending entry from /etc/fstab

nano /etc/fstabAt this point, you have the option of editing the cmdline.txt so that the Pi starts normally, by editing the file :

nano /boot/cmdline.txtAlternatively, you may prefer to let the Pi restart and hold down [SHIFT] and do the edit in the NOOBs screen.

You would then exit NOOBS and the Pi should boot normally.

Either way, we can reboot by typing :

reboot…at the prompt.

Hopefully, you won’t need to go through any of this pain as what follows did work as expected…

Unmounting the old driveFirst of all, we need to identify the device :

sudo fdisk -l |grep /dev/sdaIn my case, there’s just one device – /dev/sda1.

Now I need to unmount it :

umount /dev/sda1We then need to ensure that it isn’t mounted next time we boot

to do this, we need to edit /etc/fstab and comment out the appropriate entry :

sudo nano /etc/fstabRather than deleting it altogether, I’ve just commented it out the relevant line, in case I need to put it back for any reason :

# Below is entry for Seagate external HDD via a powered USB Hub

#UUID=68AE9F66AE9F2C16 /mnt/usbstorage ntfs nofail,uid=pi,gid=pi 0 0

In order to make sure that this has all worked as expected, I physically disconnect the external HDD from the pi and restart it with

sudo rebootWell, these days the simplest way to mount the drive is just to plug it in to one of the USB ports on the Pi. Raspbian then automagically mounts it to :

/media/pi

Initially, I thought I’d try to simply create a symbolic link under the now empty mount point where the external HDD used to live :

ln -s ../../media/pi/SanDisk/Media /mnt/usbstorage…and then restart the Plex Server…

sudo service plexmediaserver stop

sudo service plexmediaserver startUnfortunately, whilst we don’t get any errors on the command line, the Plex itself refuses to read the link and insisted that all of the media files under /mnt/usbstorage are “Unavailable”.

Therefore, we need to do things properly and explicitly mount the Flash Drive to the desired mount point.

First of all, we need to confirm the type of filesystem on the drive we want to mount.

Whilst fdisk gives us the device name, it seems to be hedging it’s bets as regards the filesystem type :

sudo fdisk -l |grep /dev/sda

Disk /dev/sda: 466.3 GiB, 500648902656 bytes, 977829888 sectors

/dev/sda1 7552 977829887 977822336 466.3G 7 HPFS/NTFS/exFAT

Fortunately, there’s a more reliable way to confirm the type, which gives as the UUID for the Flash Drive as well…

sudo blkid /dev/sda1

/dev/sda1: LABEL="SanDisk" UUID="8AF9-909A" TYPE="exfat" PARTUUID="c3072e18-01"

The type of the old external HDD was NTFS, so it’s likely I’ll need to download some packages to add exFat support on the pi :

sudo apt install exfat-fuse

sudo apt install exfat-utils

Once apt has done it’s thing and these packages are installed, we can then add an entry to fstab to mount the Flash Drive on the required directory every time the Pi starts :

sudo nano /etc/fstabThe line we need to add is in the format:

UUID=<flash drive UUID> <mount point> <file system type> <options> 0 0

The options I’ve chosen to specify are :

- defaults – use the default options rw, suid, dev, exec, auto, nouser and async

- users – Allow any user to mount and to unmount the filesystem, even when some other ordinary user mounted it.

- nofail – do not report errors for this device if it does not exist

- 0 – exclude from any system backup

- 0 – do not indluce this device in fsck check

So, my new entry is :

UUID=8AF9-909A /mnt/usbstorage exfat defaults,users,nofail 0 0

Once these changes are saved, we can check that the command will work :

unmount /dev/sda1

mount -a

If you don’t get any feedback from this command, it’s an indication that it worked as expected.

You should now be able to see the file system on the flash drive under /mnt/usbstorage :

ls -l /mnt/usbstorage/

total 2816

-rwxr-xr-x 1 root root 552605 Sep 23 2021 Install SanDisk Software.dmg

-rwxr-xr-x 1 root root 707152 Sep 23 2021 Install SanDisk Software.exe

drwxr-xr-x 5 root root 262144 Dec 31 16:19 Media

drwxr-xr-x 3 root root 262144 Apr 10 2018 other_plex_metadata

drwxr-xr-x 6 root root 262144 Mar 11 2017 Photos

-rwxr-xr-x 1 root root 300509 Sep 23 2021 SanDisk Software.pdf

One final step – reboot the pi once more to ensure that your mount is permanent.

With that done, I should be able to continue enjoying my media server, but with substantially less hardware.

ReferencesThere are a couple of sites that may be of interest :

There is an explanation of the fstab options here.

You can find further details on the cmdline.txt file here.

Using STANDARD_HASH to generate synthetic key values

In Oracle, identity columns are a perfect way of generating a synthetic key value as the underlying sequence will automatically provide you with a unique value every time it’s invoked, pretty much forever.

One minor disadvantage of sequence generated key values is that you cannot predict what they will be ahead of time.

This may be a bit of an issue if you need to provide traceability between an aggregated record and it’s component records, or if you want to update an existing aggregation in a table without recalculating it from scratch.

In such circumstances you may find yourself needing to write the key value back to the component records after generating the aggregation.

Even leaving aside the additional coding effort required, the write-back process may be quite time consuming.

This being the case, you may wish to consider an alternative to the sequence generated key value and instead, use a hashing algorithm to generate a unique key before creating the aggregation.

That’s your skeptical face.

You’re clearly going to take some convincing that this isn’t a completely bonkers idea.

Well, if you can bear with me, I’ll explain.

Specifically, what I’ll look at is :

- using the STANDARD_HASH function to generate a unique key

- the chances of the same hash being generated for different values

- before you rush out to buy a lottery ticket ( part one) – null values

- before you rush out to buy a lottery ticket (part two) – date formats

- before you rush out to buy a lottery ticket (part three) – synching the hash function inputs with the aggregation’s group by

- a comparison between the Hashing methods that can be used with STANDARD_HASH

Just before we dive in, I should mention Dani Schnider’s comprehensive article on this topic, which you can find here.

Example TableI have a table called DISCWORLD_BOOKS_STAGE – a staging table that currently contains :

select *

from discworld_books_stage;

TITLE PAGES SUB_SERIES YOUNG_ADULT_FLAG

---------------------- ---------- ---------------------- ----------------

The Wee Free Men 404 TIFFANY ACHING Y

Monstrous Regiment 464 OTHER

A Hat Full of Sky 298 TIFFANY ACHING Y

Going Postal 483 INDUSTRIAL REVOLUTION

Thud 464 THE WATCH

Wintersmith 388 TIFFANY ACHING Y

Making Money 468 INDUSTRIAL REVOLUTION

Unseen Academicals 533 WIZARDS

I Shall Wear Midnight 434 TIFFANY ACHING Y

Snuff 370 THE WATCH

Raising Steam 372 INDUSTRIAL REVOLUTION

The Shepherd's Crown 338 TIFFANY ACHING Y

I want to aggregate a count of the books and total number of pages by Sub-Series and whether the book is a Young Adult title and persist it in a new table, which I’ll be updating periodically as new data arrives.

Using the STANDARD_HASH function, I can generate a unique value for each distinct sub_series, young_adult_flag value combination by running :

select

standard_hash(sub_series||'#'||young_adult_flag||'#') as cid,

sub_series,

young_adult_flag,

sum(pages) as total_pages,

count(title) as number_of_books

from discworld_books_stage

group by sub_series, young_adult_flag

order by 1

/

CID SUB_SERIES YA TOTAL_PAGES NO_BOOKS

---------------------------------------- ------------------------- -- ----------- ----------

08B0E5ECC3FD0CDE6732A9DBDE6FF2081B25DBE2 WIZARDS 533 1

8C5A3FA1D2C0D9ED7623C9F8CD5F347734F7F39E INDUSTRIAL REVOLUTION 1323 3

A7EFADC5EB4F1C56CB6128988F4F25D93FF03C4D OTHER 464 1

C66E780A8783464E89D674733EC16EB30A85F5C2 THE WATCH 834 2

CE0E74B86FEED1D00ADCAFF0DB6DFB8BB2B3BFC6 TIFFANY ACHING Y 1862 5

OK, so we’ve managed to get unique values across a whole seven rows. But, lets face it, generating a synthetic key value in this way does introduce the risk of a duplicate hash being generated for multiple unique records.

As for how much of a risk…

Odds on a collisionIn hash terms, generating the same value for two different inputs is known as a collision.

The odds on this happening for each of the methods usable with STANDARD_HASH are :

MethodCollision OddsMD52^64SHA12^80SHA2562^128SHA3842^192SHA5122^256Figures taken from https://en.wikipedia.org/wiki/Hash_function_security_summaryBy default, STANDARD_HASH uses SHA1. The odds of a SHA1 collision are :

1 in 1,208,925,819,614,629,174,706,176

By comparison, winning the UK Lottery Main Draw is pretty much nailed on at odds of

1 in 45,057,474

So, if you do happen to come a cropper on that 12 octillion ( yes, that’s really a number)-to-one chance then your next move may well be to run out and by a lottery ticket.

Before you do, however, it’s worth checking to see that you haven’t fallen over one or more of the following…

Remember that the first argument we pass in to the STANDARD_HASH function is a concatenation of values.

If we have two nullable columns together we may get the same concatenated output where one of the values is null :

with nulltest as (

select 1 as id, 'Y' as flag1, null as flag2 from dual union all

select 2, null, 'Y' from dual

)

select id, flag1, flag2,

flag1||flag2 as input_string,

standard_hash(flag1||flag2) as hash_val

from nulltest

/

ID F F INPUT_STRING HASH_VAL

---------- - - ------------ ----------------------------------------

1 Y Y 23EB4D3F4155395A74E9D534F97FF4C1908F5AAC

2 Y Y 23EB4D3F4155395A74E9D534F97FF4C1908F5AAC

To resolve this, you can use the NVL function on each column in the concatenated input to the STANDARD_HASH function.

However, this is likely to involve a lot of typing if you have a large number of columns.

Instead, you may prefer to simply concatenate a single character after each column:

with nulltest as (

select 1 as id, 'Y' as flag1, null as flag2 from dual union all

select 2, null, 'Y' from dual

)

select id, flag1, flag2,

flag1||'#'||flag2||'#' as input_string,

standard_hash(flag1||'#'||flag2||'#') as hash_val

from nulltest

/

ID F F INPUT_STRING HASH_VAL

---------- - - ------------ ----------------------------------------

1 Y Y## 2AABF2E3177E9A5EFBD3F65FCFD8F61C3C355D67

2 Y #Y# F84852DE6DC29715832470A40B63AA4E35D332D1

Whilst concatenating a character into the input string does solve the null issue, it does mean we also need to consider…

Date FormatsIf you just pass a date into STANDARD_HASH, it doesn’t care about the date format :

select

sys_context('userenv', 'nls_date_format') as session_format,

standard_hash(trunc(sysdate))

from dual;

SESSION_FORMAT STANDARD_HASH(TRUNC(SYSDATE))

-------------- ----------------------------------------

DD-MON-YYYY 9A2EDB0D5A3D69D6D60D6A93E04535931743EC1A

alter session set nls_date_format = 'YYYY-MM-DD';

select

sys_context('userenv', 'nls_date_format') as session_format,

standard_hash(trunc(sysdate))

from dual;

SESSION_FORMAT STANDARD_HASH(TRUNC(SYSDATE))

-------------- ----------------------------------------

YYYY-MM-DD 9A2EDB0D5A3D69D6D60D6A93E04535931743EC1A

However, if the date is part of a concatenated value, the NLS_DATE_FORMAT will affect the output value as the date is implicitly converted to a string…

alter session set nls_date_format = 'DD-MON-YYYY';

Session altered.

select standard_hash(trunc(sysdate)||'XYZ') from dual;

STANDARD_HASH(TRUNC(SYSDATE)||'XYZ')

----------------------------------------

DF0A192333BDF860AAB338C66D9AADC98CC2BA67

alter session set nls_date_format = 'YYYY-MM-DD';

Session altered.

select standard_hash(trunc(sysdate)||'XYZ') from dual;

STANDARD_HASH(TRUNC(SYSDATE)||'XYZ')

----------------------------------------

FC2999F8249B89FE88D4C0394CC114A85DAFBBEF

Therefore, it’s probably a good idea to explicitly set the NLS_DATE_FORMAT in the session before generating the hash.

Use the same columns in the STANDARD_HASH as you do in the GROUP BYI have another table called DISCWORLD_BOOKS :

select sub_series, main_character

from discworld_books

where sub_series = 'DEATH';

SUB_SERIES MAIN_CHARACTER

-------------------- ------------------------------

DEATH MORT

DEATH DEATH

DEATH DEATH

DEATH SUSAN STO HELIT

DEATH LU TZE

If I group by SUB_SERIES and MAIN_CHARACTER, I need to ensure that I include those columns as input into the STANDARD_HASH function.

Otherwise, I’ll get the same hash value for different groups.

For example, running this will give us the same hash for each aggregated row in the result set :

select

sub_series,

main_character,

count(*),

standard_hash(sub_series||'#') as cid

from discworld_books

where sub_series = 'DEATH'

group by sub_series, main_character,

standard_hash(sub_series||main_character)

order by 1

/

SUB_SERIES MAIN_CHARACTER COUNT(*) CID

-------------------- ------------------------------ ---------- ----------------------------------------

DEATH MORT 1 5539A1C5554935057E60CBD021FBFCD76CB2EB93

DEATH DEATH 2 5539A1C5554935057E60CBD021FBFCD76CB2EB93

DEATH LU TZE 1 5539A1C5554935057E60CBD021FBFCD76CB2EB93

DEATH SUSAN STO HELIT 1 5539A1C5554935057E60CBD021FBFCD76CB2EB93

What we’re actually looking for is :

select

sub_series,

main_character,

count(*),

standard_hash(sub_series||'#'||main_character||'#') as cid

from discworld_books

where sub_series = 'DEATH'

group by sub_series, main_character,

standard_hash(sub_series||main_character)

order by 1

/

SUB_SERIES MAIN_CHARACTER COUNT(*) CID

-------------------- ---------------------- -------- ----------------------------------------

DEATH MORT 1 01EF7E9D4032CFCD901BB2A5A3E2A3CD6A09CC18

DEATH DEATH 2 167A14D874EA960F6DB7C2989A3E9DE07FAF5872

DEATH LU TZE 1 5B933A07FEB85D6F210825F9FC53F291FB1FF1AA

DEATH SUSAN STO HELIT 1 0DA3C5B55F4C346DFD3EBC9935CB43A35933B0C7

We’re going to populate this table :

create table discworld_subseries_aggregation

(

cid varchar2(128),

sub_series varchar2(50),

young_adult_flag varchar2(1),

number_of_books number,

total_pages number

)

/…with the current contents of the DISCWORLD_BOOKS_STAGE table from earlier. We’ll then cleardown the staging table, populate it with a new set of data and then merge it into this aggregation table.

alter session set nls_date_format = 'DD-MON-YYYY';

merge into discworld_subseries_aggregation agg

using

(

select

cast(standard_hash(sub_series||'#'||young_adult_flag||'#') as varchar2(128)) as cid,

sub_series,

young_adult_flag,

count(title) as number_of_books,

sum(pages) as total_pages

from discworld_books_stage

group by sub_series, young_adult_flag

) stg

on ( agg.cid = stg.cid)

when matched then update

set agg.number_of_books = agg.number_of_books + stg.number_of_books,

agg.total_pages = agg.total_pages + stg.total_pages

when not matched then insert ( cid, sub_series, young_adult_flag, number_of_books, total_pages)

values( stg.cid, stg.sub_series, stg.young_adult_flag, stg.number_of_books, stg.total_pages)

/

commit;

truncate table discworld_books_stage;

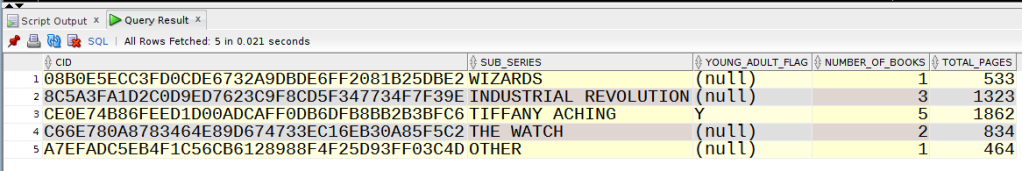

Once we’re run this, the conents of DISCWORLD_SUBSERIES_AGGREGATION is :

Next, we insert the rest of the Discworld books into the staging table :

And run the merge again :

alter session set nls_date_format = 'DD-MON-YYYY';

merge into discworld_subseries_aggregation agg

using

(

select

cast(standard_hash(sub_series||'#'||young_adult_flag||'#') as varchar2(128)) as cid,

sub_series,

young_adult_flag,

count(title) as number_of_books,

sum(pages) as total_pages

from discworld_books_stage

group by sub_series, young_adult_flag

) stg

on ( agg.cid = stg.cid)

when matched then update

set agg.number_of_books = agg.number_of_books + stg.number_of_books,

agg.total_pages = agg.total_pages + stg.total_pages

when not matched then insert ( cid, sub_series, young_adult_flag, number_of_books, total_pages)

values( stg.cid, stg.sub_series, stg.young_adult_flag, stg.number_of_books, stg.total_pages)

/

7 rows merged.

Relative Performance of Hashing Methods

Relative Performance of Hashing Methods

Whilst you may consider the default SHA1 method perfectly adequate for generating unique values, it may be of interest to examine the relative performance of the other available hashing algorithms.

For what it’s worth, my tests on an OCI Free Tier 19c instance using the following script were not that conclusive :

with hashes as

(

select rownum as id, standard_hash( rownum, 'MD5' ) as hash_val

from dual

connect by rownum <= 1000000

)

select hash_val, count(*)

from hashes

group by hash_val

having count(*) > 1

/

Running this twice for each method,replacing ‘MD5’ with each of the available algorithms in turn :

MethodBest Runtime (secs)MD50.939SHA11.263SHA2562.223SHA3842.225SHA5122.280I would imagine that, among other things, performance may be affected by the length of the input expression to the function.

Dr Who and Oracle SQL Pivot

Dr Who recently celebrated it’s 60th Anniversary and the BBC marked the occasion by making all episodes since 1963 available to stream.

This has given me the opportunity to relive those happy childhood Saturday afternoons spent cowering behind the sofa watching Tom Baker

take on the most fiendish adversaries that the Costume department could conjure up in the days before CGI.